Overview

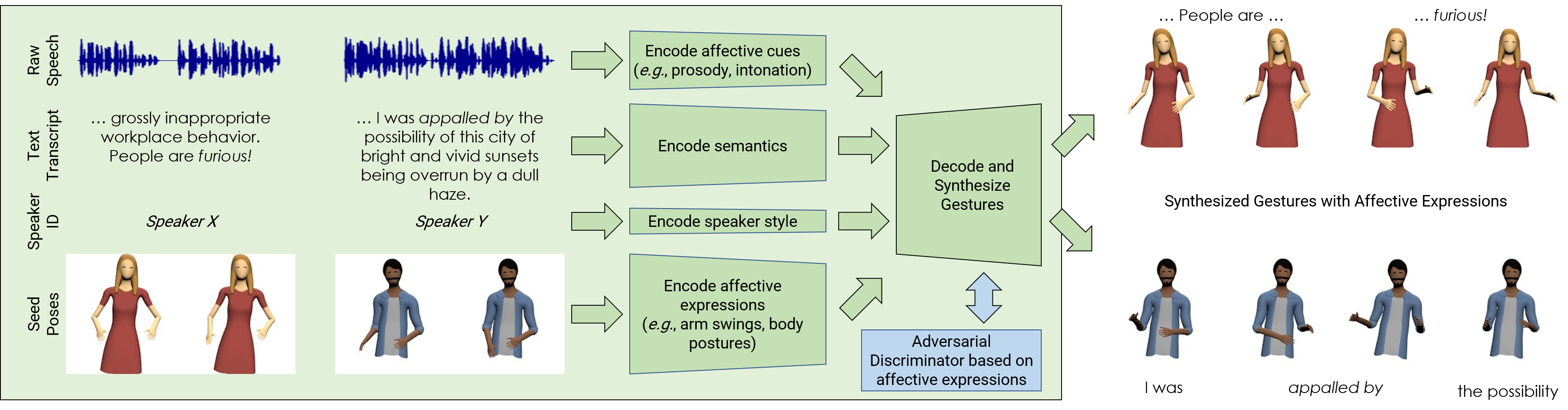

This area focuses primarily on generating virtual agents with appropriate emotional expressiveness for a variety of human-agent interactions in social contexts, such as sharing and navigating the same space, holding conversations, and engaging human audiences.